The Speed Dilemma

AIs that outthink and outperform humans in all domains are highly likely to be built in the next few years. Would pausing AI capabilities development decrease existential risk, or would a pause mean passing up an opportunity? Or worse, could slowing down actually decrease safety? After all, if some actors slow down, those who don’t follow the rules gain a relative advantage. Anthropic’s CEO, Dario Amodei, says this is why every decision he makes “feels like it is balanced on the edge of a knife.” “If we don’t build fast enough,” he says, “the authoritarian countries could win, but if we build too fast then [existential risk] could prevail.” In this post, I identify one of the central cruxes in the debate over pausing AI and argue that views will converge once this crux is resolved.

Background

The media and information environment is confused, and perspectives on AI vary wildly. Many people don’t think about AI at all. Many more imagine that future AI models will remain chatbots on their phones. A final group speculates about AIs that not only answer questions but out-think humans, execute tasks that take hours, days, or months, control computers, tools, and robots to shape the physical world, and participate in the economy. OpenAI, Anthropic, and Google DeepMind have all declared that their explicit goal is to build artificial general intelligence, and all are backed by billions of dollars in investment and the brightest talent of our generation.

Recent developments that would have been considered science fiction five years ago are now commonplace. For example, Sora is an AI model that generates videos from text prompts. The length of coding tasks that frontier systems can complete is growing exponentially, doubling roughly every 7 months, and this trend has held for 6 years. Two years ago, GPT-3.5 could complete engineering tasks requiring 1 minute of an engineer's time; today, o1 can complete tasks requiring 30 minutes. If this trend continues, AI models will be able to complete tasks requiring a full work day (8 hours) by 2027.
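To make the extrapolation concrete, here is a minimal Python sketch. It assumes task length grows as a clean exponential with a fixed doubling time; the function names are hypothetical and chosen only for illustration.

```python
import math


def months_to_reach(start_minutes: float, target_minutes: float, doubling_months: float) -> float:
    """Months for task length to grow from start to target, assuming a fixed doubling time."""
    # Number of doublings needed, multiplied by the length of one doubling period.
    return math.log2(target_minutes / start_minutes) * doubling_months


def implied_doubling_months(start_minutes: float, end_minutes: float, elapsed_months: float) -> float:
    """Doubling time implied by two observations, assuming steady exponential growth."""
    return elapsed_months / math.log2(end_minutes / start_minutes)


# From 30-minute tasks to one 8-hour work day at a 7-month doubling time.
months = months_to_reach(30, 8 * 60, 7)
print(f"{months:.0f} months")  # 28 months: 16x growth is 4 doublings

# Doubling time implied by going from 1-minute to 30-minute tasks in 24 months.
print(f"{implied_doubling_months(1, 30, 24):.1f} months")  # 4.9 months
```

Sixteenfold growth is four doublings. At a 7-month doubling time, the jump from 30-minute to 8-hour tasks therefore takes about 28 months, a little over two years. The second call shows that going from 1 minute to 30 minutes in two years would imply a faster doubling time of roughly 5 months, so the projected date depends noticeably on which rate one assumes.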

Government officials from D.C. to Beijing have noticed this progress and are preparing for an arms race. The Trump administration has announced a $500 billion, Manhattan Project-style investment in AI data centers.

Videos generated from the prompt “an otter using wifi on a plane” in 2022 and in 2024. AI is progressing fast, and not only in video generation.

Worry About AI: The Case for Pausing / Stopping

Concern From Those Closest to AI: In March 2023, Nobel Prize winners, top scientists, CEOs, and tech figures from Elon Musk to Steve Wozniak signed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4. A few days later, Eliezer Yudkowsky published an op-ed in Time magazine, arguing that researchers steeped in these issues expect that the obvious result of building a superhumanly smart AI, under anything resembling the current circumstances, is that “literally everyone on earth [will] die.” Social movements and protests that directly advocate for an AI pause are growing rapidly.

Existential Risk: Pause proponents argue that until we know how to make smarter-than-human AIs safe for humans, we should pause building them. Delaying AI could give us more time to build institutions and pursue technologies that would help with the AI alignment problem (making an AI’s goals line up with some target set of values). For example, interpretability research seeks to understand how AI systems work internally, such as why a model produces a particular output. Furthermore, arms races create competitive pressure, which has led AI companies to cut safety testing in order to speed up development. Helen Toner, a former OpenAI board member, testified before Congress that OpenAI insiders are begging to slow down.

Unworried: The Case for Proceeding / Accelerating

Technological Optimism: Advanced AI promises to solve problems (e.g., cancer) and reduce other risks (e.g., by helping prevent asteroid impacts). Marc Andreessen argues in his widely shared essay, “The Techno-Optimist Manifesto,” that “We are being lied to… Told that technology is ever on the verge of ruining everything… we are told to be angry, bitter, and resentful.” Technological progress is undeniably one of the main drivers of improvements in human quality of life. It eradicated smallpox, drastically reduced famine and child mortality, and allowed us to explore space. Previous AI research and model releases have already driven automation, economic growth, and research progress. It seems reasonable to expect this trend to continue.

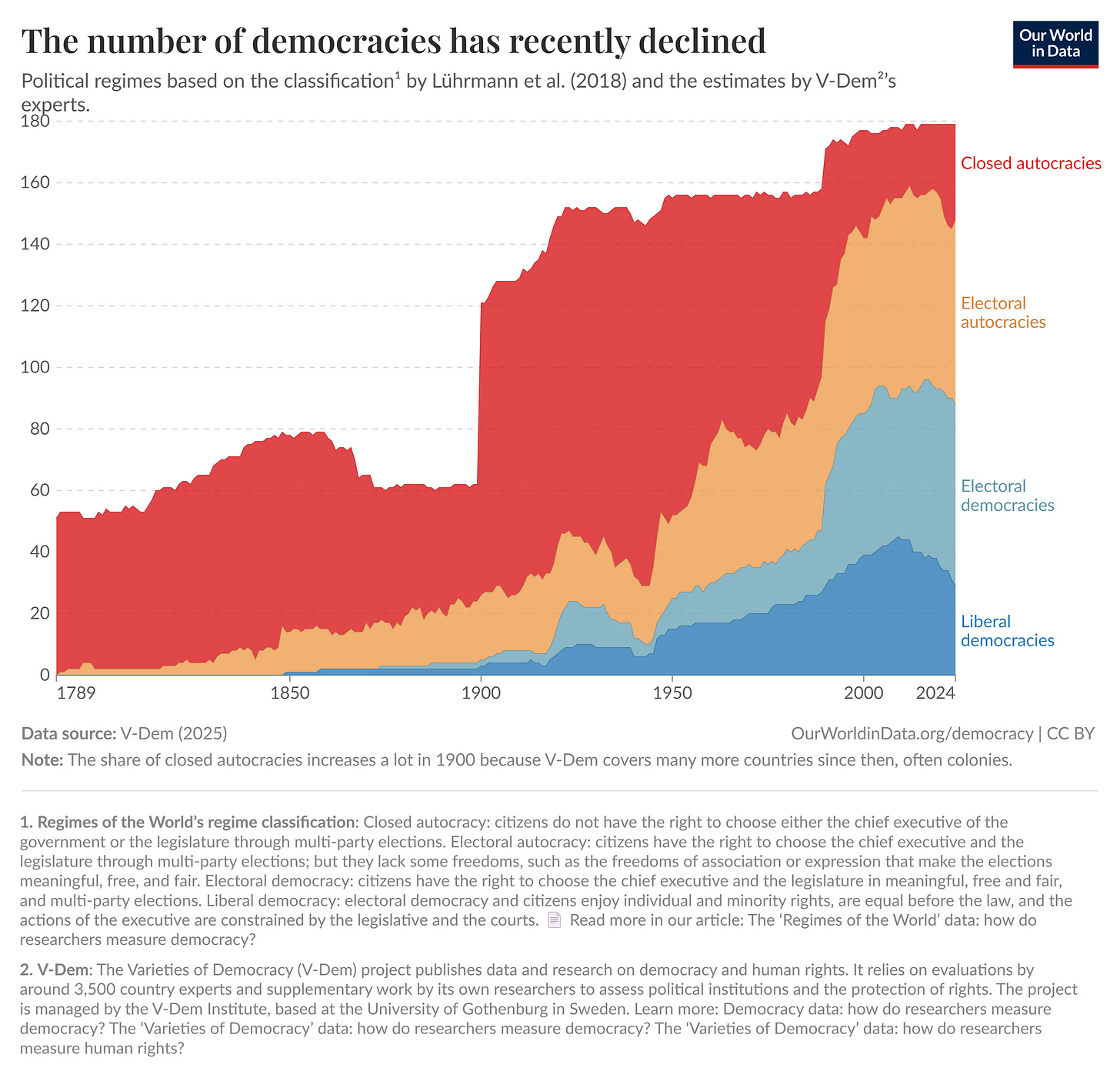

Adversarial Reasons: A moratorium on AI would improve the relative position of adversaries and hurt good actors. For instance, a moratorium on U.S. AI companies would not slow down Chinese AI development. Furthermore, fears of institutional decay, a gradual global shift away from democracy, or adversaries (e.g., China) leapfrogging the U.S. in technological and economic power suggest that democracies have only a finite window in which to develop powerful AI. Why are people more worried about an AI takeover than a takeover by an authoritarian regime? A controlled AGI would be the most powerful weapon in history and would nearly guarantee any dictator permanent control and perfect censorship.

Even domestically, a pause might mean that more safety-aware companies like OpenAI or Anthropic forfeit their lead to companies that do not even pretend to take alignment seriously (e.g., Meta). OpenAI and Anthropic are very anxious about what they’re building; we should be careful before choosing to forfeit their considerable technological lead.

What to do?

I’m still researching what to do about all this. Please like and subscribe for more.