Nvidia's Push to Sell America’s Future to China

“In the nuclear era, uranium became the linchpin of atomic power. States that secured it could enforce regulations, negotiate treaties, and limit the spread of destructive capabilities. In the realm of AI, computing resources—especially AI chips—have a similar strategic weight, fueling rivalries and shaping geopolitical calculations.” - Dan Hendrycks

Summary

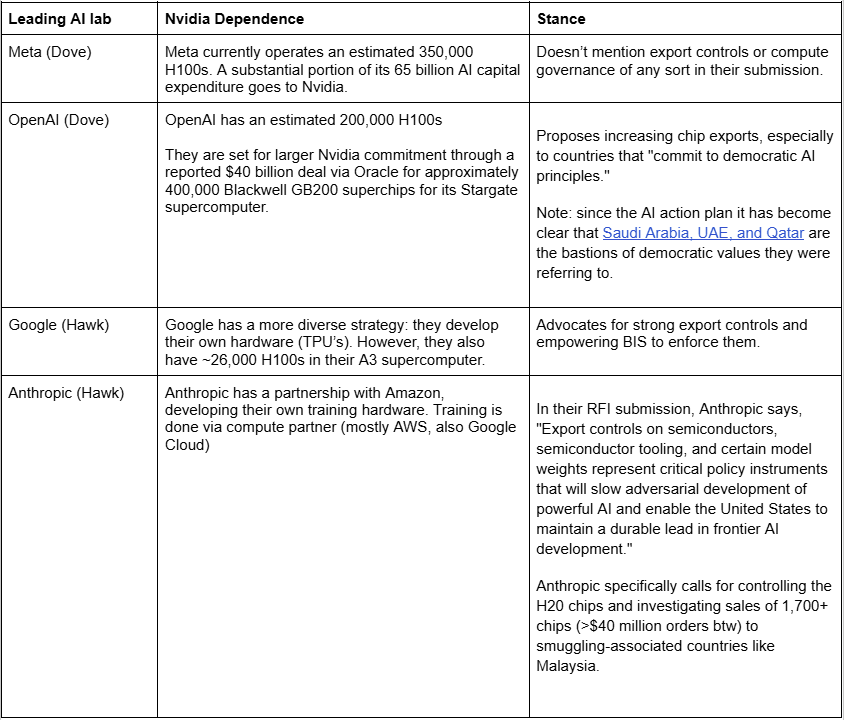

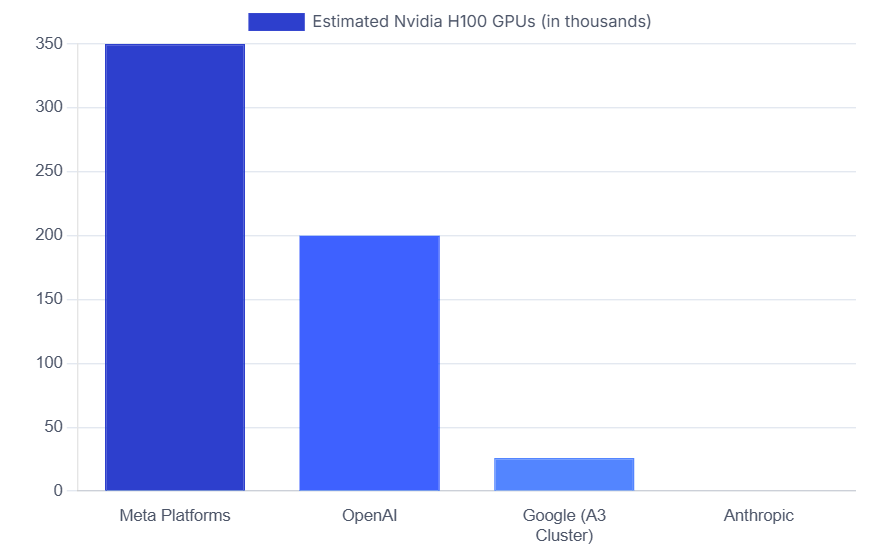

Nvidia publicly opposes the export controls that protect America’s AI leadership, and no one in Silicon Valley or D.C. dares call the company out. One reason is that the very companies that should be sounding the alarm depend heavily on Nvidia’s hardware. I read through many of the public submissions to Trump’s AI Action Plan, and the four leading AI companies' positions line up exactly with how dependent each one is on Nvidia. OpenAI and Meta, which rely heavily on Nvidia for their AI experiments, either stayed relatively silent on export controls or opposed them. The AI leaders that build or rely on non-Nvidia hardware (Google and Anthropic) stand out as China hawks. The companies most dependent on Nvidia seem to lose their voice on national security and independently arrive at Nvidia's pro-China position.

This matters because:

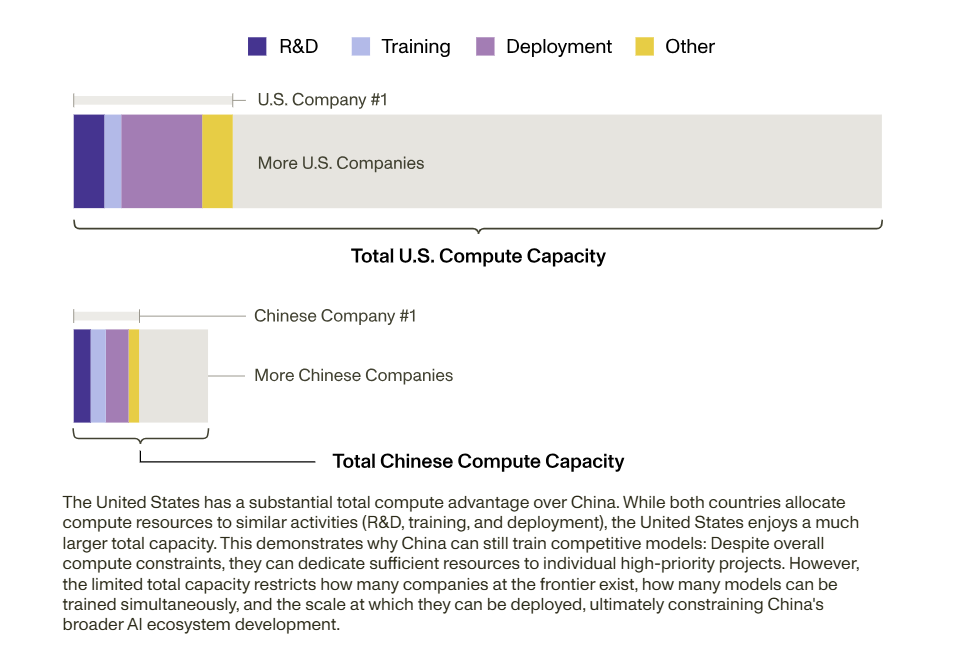

The U.S. currently has roughly 10x more AI compute capacity than China. This is our largest AI advantage.

Export controls on AI chips have been our main tool for maintaining this lead.

Every chip sold to China erodes the advantage.

I’ve found that:

The four leading AI companies' policy positions align exactly with their Nvidia dependence

I learned firsthand at Intel how deep Nvidia’s influence extends. While there, we fought a losing battle against Nvidia's software monopoly. Every major ML framework is built on CUDA, Nvidia's proprietary software layer, which creates ecosystem lock-in.

Nvidia has repeatedly circumvented export controls (designing "China-only" chips like the H20 to skirt restrictions)

When Trump finally banned the H20, they responded by lobbying to scrap controls entirely

Context: Western Compute Lead

“Over the past year, the talk of the town has shifted from $10 billion compute clusters to $100 billion clusters to trillion-dollar clusters.” - Leopold Aschenbrenner

The largest AI experiments require billion-dollar investments and thousands of chips optimized for training. AI datacenters contain tens of thousands of Nvidia H200s, costing ~$30,000 each, and training requires fundamentally different hardware than inference (running the model). Demand for these chips made Nvidia the most valuable company on earth. Nvidia designs the chips but does not manufacture them; it outsources production to TSMC in Taiwan. Nvidia/TSMC hardware is a key ingredient in massive AI experiments and is largely responsible for the U.S. producing more frontier AI models. There is good evidence that China’s DeepSeek was enabled by Nvidia chips smuggled via Malaysia.

The US AI hardware lead. Source: RAND.

AI 2027, the AI forecasting essay read by JD Vance, argues that America wins because of its compute lead, but it assumes we protect that lead. America has roughly 10 times more compute capacity than China. As RAND's analysis shows, this compute advantage is our primary moat. Every chip sold to China erodes it.

Even today’s AI helps authoritarian regimes retain power (e.g., through surveillance and by preventing dissidents from coordinating). That’s nothing compared to reaching AGI (artificial general intelligence) first.

Nvidia Has a History of Skirting Export Controls

In August 2022, the U.S. began restricting sales of high-end chips like the Nvidia H100 to China and Russia. In November 2023, Reuters broke the story that Nvidia had designed three “China-only” AI accelerators (H20, L20, L2), deliberately engineered to skirt those export controls. By lowering the raw compute throughput and PCIe I/O, Nvidia kept each device below the compute limits, so none of them required an export license, unlike the company’s flagship AI chips (H100, now H200).

As RAND points out, while H20s "underperform H100s for initial training, they excel at text generation ('sampling')—a fundamental component of advanced reinforcement learning methodologies critical to current frontier model capability advancements"

The Nvidia H20 chip created a government-approved pathway for Nvidia to do exactly what the diffusion rule and export controls were trying to prevent. Now, facing an $8 billion revenue hit from the Trump administration's decision to finally restrict H20 sales, Nvidia is advocating for giving up on export controls entirely.
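To make the threshold-dodging above concrete, here is a toy sketch of a cap-based rule. Everything in it is an assumption for illustration: the metric names, the caps, the chip numbers (all in arbitrary units), and the "any capped metric at or above its cap" logic are hypothetical and are not the actual regulation or real chip specifications. It only shows how a design can sit just under every capped metric while staying strong on a dimension the rule does not cap.

```python
from dataclasses import dataclass

# Hypothetical caps in arbitrary units; NOT the real regulatory thresholds.
CAPS = {"compute_throughput": 100.0, "io_bandwidth": 50.0}


@dataclass(frozen=True)
class Chip:
    name: str
    compute_throughput: float  # capped by the toy rule
    io_bandwidth: float        # capped by the toy rule
    memory_bandwidth: float    # NOT capped by the toy rule


def needs_license(chip: Chip, caps: dict) -> bool:
    """Toy rule: a license is required if any capped metric reaches its cap."""
    return any(getattr(chip, metric) >= cap for metric, cap in caps.items())


# Illustrative, made-up chips (not real product specifications).
flagship = Chip("flagship", compute_throughput=400.0,
                io_bandwidth=90.0, memory_bandwidth=3.0)
variant = Chip("china_variant", compute_throughput=60.0,
               io_bandwidth=45.0, memory_bandwidth=4.0)

for chip in (flagship, variant):
    print(chip.name, "needs license:", needs_license(chip, CAPS))
```

In this toy setup the variant clears the rule even though its uncapped metric is higher than the flagship's, which is the general shape of the concern RAND raises about sampling performance.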

The Hawks and The Doves

The major companies dependent on Nvidia's chips either stayed silent on export controls or opposed them. OpenAI, Anthropic, and Google DeepMind are the three leading AI labs producing frontier models. Meta is behind but has the infrastructure necessary to be a leader.

Nvidia-Dependent Companies are China Doves

*Apparently Substack doesn’t allow tables, so I have to use a screenshot.

Here is a graph from Gemini:

Other notable hawks:

Patriotic small tech (e.g. Scale AI, Palantir): Scale writes, “China is leading on data, we are tied on algorithms, and the United States remains ahead on compute.” Scale supports stronger export control enforcement and focuses its entire letter on the CCP.

American think tanks, including RAND, Georgetown's Center for Security and Emerging Technology, the Center for AI Policy, the Center for a New American Security, and the Institute for AI Policy and Strategy, all position themselves in favor of export controls.

International AI Governance Centers: Organizations like the Oxford Centre for Governance of AI (GovAI) and the Centre for Long-Term Resilience (CLTR) in the UK highlighted compute governance as a priority in mitigating AI risk globally.

Other notable doves:

Other hardware companies (AMD, Qualcomm, Intel): They have argued that “if America won’t sell advanced processors, China will simply build its own.”

Firsthand Experience: Mechanisms to Preserve Nvidia’s Monopoly

I learned firsthand how deep Nvidia's control runs when I worked at Intel. Policymakers and techbros regularly miss that Nvidia’s business model isn’t just selling chips. The company has architected an entire AI ecosystem that is optimized for its hardware.

At Intel, I helped build TensorFlow, which was originally developed by Google. It is the second most-used machine learning framework. Many of the features in TensorFlow's backend are built upon CUDA, Nvidia’s proprietary software layer. In short: a lot of features that work fine on an H100 won’t even run on an Intel chip. This means Intel, AMD, and other hardware companies that want to run TensorFlow need to maintain their own versions of the software independently, in effect running a parallel software universe. It is a never-ending uphill battle to catch up to Nvidia, and one that Intel will never win.

Software is at the center of Nvidia’s dominance: nearly everyone in academia and industry uses and contributes to software built for Nvidia hardware and written in CUDA, which effectively cannot run on non-Nvidia chips. Every contribution widens the moat. By FLOPs, or raw compute power, Intel and AMD could compete with Nvidia today. However, the software that machine learning engineers use daily is ruthlessly optimized for and built upon Nvidia’s CUDA. This includes TensorFlow, PyTorch, and the libraries they’re built upon.

This is yet another reason why companies are so vulnerable to Nvidia’s influence. Nvidia doesn’t just control the hardware stack; it controls the software stack too.
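The lock-in described above can be sketched with a toy kernel registry. This is not how TensorFlow or PyTorch are actually implemented; the registry, the backend names (`cuda`, `other_vendor`), and the operation names are all hypothetical, chosen only to show why a feature written for one backend fails on every other backend until someone ports it.

```python
# Toy model of backend-specific kernels. All names here are hypothetical.
KERNELS = {}  # maps (op_name, backend) -> implementation


def register(op, backend):
    """Register the decorated function as the kernel for (op, backend)."""
    def decorator(fn):
        KERNELS[(op, backend)] = fn
        return fn
    return decorator


@register("matmul", "cuda")
@register("matmul", "other_vendor")  # a basic op that every vendor has ported
def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


@register("attention_scores", "cuda")  # a newer feature, written for one vendor only
def attention_scores(q, k):
    # Stand-in for a specialized kernel: computes q times k-transposed.
    return matmul(q, [list(col) for col in zip(*k)])


def run(op, backend, *args):
    """Dispatch to the backend's kernel, or fail if nobody has ported it."""
    try:
        kernel = KERNELS[(op, backend)]
    except KeyError:
        raise NotImplementedError(f"{op} has no {backend} kernel") from None
    return kernel(*args)


q = [[1, 0], [0, 1]]
k = [[1, 2], [3, 4]]
print(run("matmul", "other_vendor", q, k))   # basic op works everywhere
print(run("attention_scores", "cuda", q, k))  # newer op works on one backend
try:
    run("attention_scores", "other_vendor", q, k)
except NotImplementedError as err:
    print("unsupported:", err)
```

Every new feature that lands only in the `cuda` column is one more kernel the other vendors have to write and maintain themselves, which is the parallel universe described above.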

“They'll Develop It Anyway”

What is Nvidia’s main argument for scrapping export controls altogether?

Nvidia CEO Jensen Huang argues: "The question is not whether China will have AI. It already does. The question is whether one of the world's largest AI markets will run on American platforms."

This is like saying: "China is developing nuclear weapons despite our restrictions, so we should sell them uranium."

As blogger Zvi Mowshowitz notes: "America should sanction our men's soccer team, too, so they will do better."

The fact that China is managing to develop AI despite restrictions isn't an argument for removing those restrictions; it's proof they're working.

Chip manufacturing profits are not the right metric to care about. Who has the compute is what matters.

The Bottom Line

If China could effectively access the best AI chips, we would lose what is arguably our biggest and most important advantage. Deciding to arms-race China might be a massive mistake, but if the self-fulfilling prophecy of an AI arms race with China comes to fruition, why would we give our strongest advantage to our adversary? We should not sacrifice our future on the altar of Nvidia’s stock price.

Despite tweeting about winning the AI arms race, we are deciding to lose it.

I first sought to write this up because I read a bunch of the public submissions to the AI Action Plan and thought I should at least turn my notes into something instead of just caching all those policy proposals until I forget them. I found something disturbing: Nvidia advocates that it should be allowed to sell to China openly, and hardly anyone in Washington seems to hold them accountable for this.

Others Have Noticed This

I started writing this a week or two ago, then forgot to post it on LessWrong.

CSIS is apparently releasing a paper about Nvidia, so I wanted to post this now. (They publish high-quality work on export controls and compute: https://www.csis.org/analysis/limits-chip-export-controls-meeting-china-challenge)

Other Good Proposals in the AI Action Plan

Aside from export controls, there are other solid proposals I found in the AI Action Plan submissions that I don’t see very many people talking about. Here are two of them:

A key pillar in IAPS’s submission is denying foreign adversaries access to advanced computing technology. This is because AI models are national strategic assets: algorithms and weights are worth billions in R&D investment, and the competitive advantages they confer could determine leadership for years to come. If stolen by adversaries, models could be replicated at a fraction of the training cost, because inference is far cheaper than training. The comment brings up RAND’s security levels, suggesting security “equivalent to SL4 and SL5 as outlined by the RAND Corporation.”

Recommendation 4 in the IFP’s submission is “A pilot highly secure data center” where they explain RAND’s framework for model weight protection. The IFP expands upon IAPS’s suggestion, saying “the DOD should build and operate a pilot SL4 AI cluster to develop best practices for securing sensitive AI workloads and models, and to develop next-generation AI-enabled national security applications.” This naturally extends IAPS’s security suggestions and concerns over foreign nationals stealing secrets from leading AI labs. It is also highly actionable.

Expand the AI Safety Institute

There is another silent battle over NIST’s AI Safety Institute (AISI), which currently houses almost all of the government’s capacity for AI model testing. Its papers are impressive, it has conducted interesting evals, and it is the source of most of the U.S. government's AI competence. Its head of AI safety is Paul Christiano, a co-creator of RLHF, the technique that enabled ChatGPT. AISI is the organization that could set AI standards aligned with Western values.

Organizations including Scale AI, the AI Futures Project, Anthropic, the Institute for AI Policy and Strategy, the Center for AI Policy, and RAND (the list goes on) support NIST’s AI Safety Institute, sometimes through a directional stance and sometimes through detailed policy proposals. On the other hand, a libertarian think tank (R Street, which I had never heard of) and Andreessen Horowitz recommend winding down AISI.

Release New Versions of Chinese AI Models

The Center for a New American Security suggests, “Rapidly releasing modified versions of Chinese open source AI that strips away hidden censorship mechanisms and ‘core socialist values’ required by Chinese AI regulation.”